#@title ## Cross-domain-Cycle-Consistent-CyCADA-CycleGAN-and-HPO v10

Introduction

The driving purpose of this report was to improve the generalization and transferability of learning by leveraging visual information and latent representations, using the latest advancements such as (Rahman 2022) Style-Transfer Deep RL (STDRL), adapted for our final project amongst other methods that “may use visual information and latent representations to improve the generalization and transferability of RL agents. STDRL may use style transfer techniques to generate diverse and realistic visual observations for RL agents from a fixed dataset, which can enhance the data efficiency and robustness of the agents. MLR may use mask-based techniques to reconstruct latent states from partial observations, which can enable the agents to handle occlusions and missing information. STDRL and MLR may be based on the methods proposed by Rahman and Xue (2020; 2022) and adapted to hyperbolic RL settings”; notwithstanding that most STDRL methods “rely on pre-trained models or fixed datasets for style transfer or latent reconstruction, which may limit their applicability to novel domains or tasks”.

STDRL Ref: Rahman, M. M., & Xue, Y. (2022). Bootstrap State Representation using Style Transfer for Better Generalization in Deep Reinforcement Learning. In Proceedings of the European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML-PKDD).

Nevertheless, given this report's limited scope, we had to reserve publishing our advanced stage HPO (Hyperparameter Opt.) for such STDRL to the Appendix of the final project, and rather opted instead to explore the foundations of HPO for minimal CNNs and GANs on MNIST variants & Cifar10; before finally building from scratch a Style-Transfer GAN [rather than a Style-Transfer Deep RL] to demonstrate the more generalized application of such promising 1st technology.

Moreover, considering the [needs must] long and thorough set up needed to be later documented as an actual reproducible code towards above-stated objectives; and for the sake of not boring any beginner reader by the complexity of the code without first getting the bigger picture, it was deemed more readable to start the report with this introductory section before initializing both the [code lengthy] manual and TensorBoard-based HPOs.

Therefore, below is both a primer and a walkthrough of the Keras implementation of [Cross-domain Cycle-Consistent] CycleGAN & CyCADA, as understood having had read the valuable Chapter 7 [pages 220-252] of the Atienza, R. (2018). Advanced Deep Learning with TensorFlow 2 and Keras. Packt Publishing:

CycleGAN & CyCADA

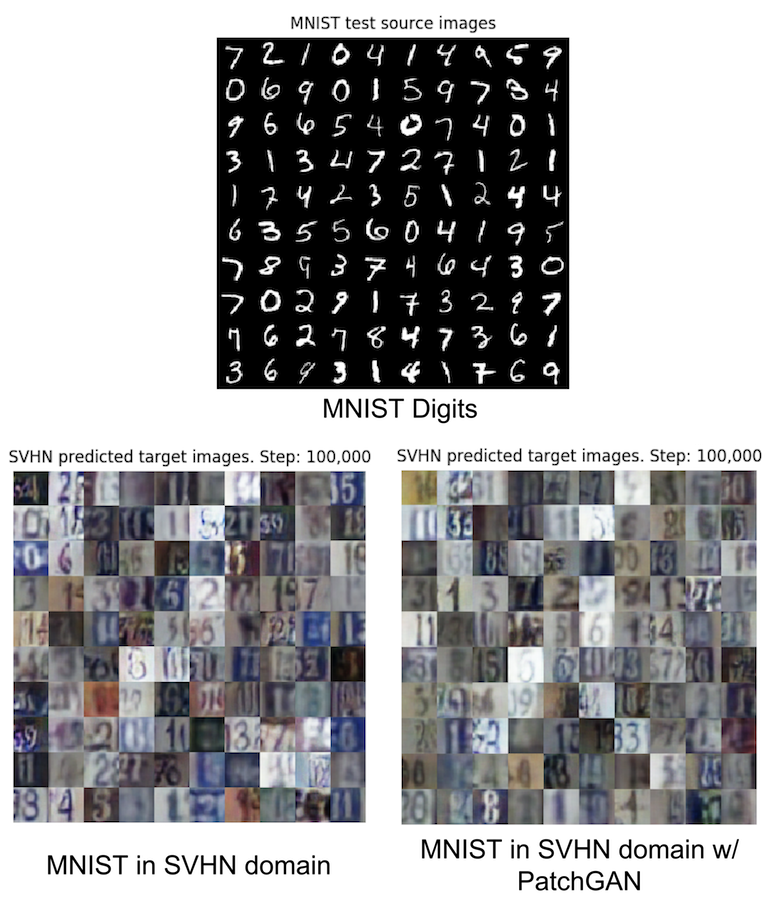

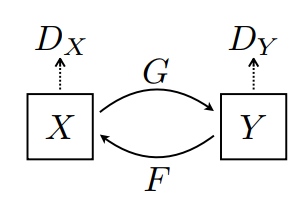

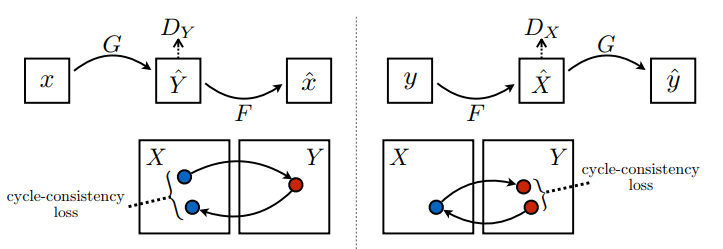

CycleGAN is a technique for translating images from one domain to another without paired examples. It uses two generators and two discriminators that form a cycle of transformations. The generators are U-Networks and the discriminators are decoders with PatchGAN option. The cycle consistency loss ensures that the original image can be reconstructed from the translated image. CycleGAN can be used for various applications such as season translation, style transfer, and object transfiguration.

Ref: (Zhu, 2017) "Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks"

Cross-domain transfer is a term that refers to the process of transforming an image from one form to another, such as changing its style, color, or content. For example, cross-domain transfer can be used to colorize grayscale images, convert satellite images to maps, or make summer photos look like winter. Cross-domain transfer has many practical applications in various fields such as computer vision, computer graphics, image processing, and autonomous driving. In this report, we will introduce cross-domain Generative Adversarial Networks (GANs), which are a type of neural network that can learn to generate realistic images across different domains using adversarial training.

GANs are a type of machine learning model that can generate realistic images, videos, and voices from training data. They consist of two neural networks: a generator that creates new data instances, and a discriminator that evaluates how real they are. One of the applications of GANs is cross-domain translation, which means transforming an image from one domain to another, such as turning a photo into a painting. CycleGAN is a popular algorithm for cross-domain translation that does not require paired training data, unlike other methods such as pix2pix. CycleGAN can learn to translate images between domains using only unpaired collections of images, such as satellite images and maps.

CycleGAN is a method for learning how to translate images from one domain to another without paired examples. It uses two generators and two discriminators that are trained adversarially and cyclically. The generators try to fool the discriminators by producing realistic images in the target domain, while the discriminators try to distinguish between real and fake images. The cycle consistency loss ensures that the generators can also reconstruct the original images from the translated ones. CycleGAN can be used for various image manipulation tasks such as style transfer, photo enhancement, object transfiguration, and season transfer .

CycleGAN is a model that aims to solve the image-to-image translation problem without requiring paired examples of input and output images. For instance, it can learn to colorize grayscale images by using two generators and two discriminators. The generators use a U-Net structure to map the latent representation of the source domain (grayscale) to the target domain (color) and vice versa. The discriminators try to distinguish between real and fake images from each domain. To ensure that the generators preserve the content of the input images, CycleGAN also uses a cycle-consistency loss that penalizes the difference between the original and reconstructed images.

U-Net is a convolutional neural network that was designed for biomedical image segmentation. It has a u-shaped architecture that consists of two parts: an encoder and a decoder. The encoder applies convolutions, ReLUs and max pooling to reduce the spatial dimension and increase the feature information of the input image. The decoder uses upsampling and convolutions to restore the spatial dimension and combine it with feature information from the encoder. The encoder and decoder layers are connected by skip connections, which concatenate the outputs of corresponding layers. This allows the network to preserve spatial information across different resolutions and produce more precise segmentations. U-Net also uses instance normalization instead of batch normalization, which normalizes each image or feature separately. This helps to maintain contrast in style transfer tasks. U-Net can work with fewer training images and handle different types of images, such as satellite or handwritten images. The discriminator of U-Net is similar to a vanilla GAN discriminator, but it can use patchGANs to predict the probability of each patch being real or fake, instead of using a single scalar value for the whole image. This improves parameter efficiency and image quality for the generator.

Finally, "before we can use the CycleGAN to build and train functions, we have to perform some data preparation. The modules cifar10_utils.py and other_utils.py load the CIFAR10 train and test data." After loading, the "train and test images are converted to grayscale to generate the source data and test source data."

HPO (Hyperparameter Opt. Tuning) & TB Dashboard

!pip install -q datasets

import time

import pickle

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow.keras import datasets, layers, models

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Dropout, Activation, Flatten

from tensorflow.keras.layers import Conv2D, MaxPooling2D

from tensorflow.keras.callbacks import TensorBoard

# pickle_in = open("X.pickle","rb")

# X = pickle.load(pickle_in)

# pickle_in = open("y.pickle","rb")

# y = pickle.load(pickle_in)

# X = X/255.0

(train_images, train_labels), (test_images, test_labels) = datasets.cifar10.load_data()

# Normalize pixel values to be between 0 and 1

# train_images, test_images = train_images / 255.0, test_images / 255.0

X, y = train_images / 255.0, test_images / 255.0

class_names = ['airplane', 'automobile', 'bird', 'cat', 'deer',

'dog', 'frog', 'horse', 'ship', 'truck']

170498071/170498071 [==============================] - 4s 0us/step

assert tf.test.is_gpu_available()

assert tf.test.is_built_with_cuda()

# dense_layers = [0, 1]

# layer_sizes = [32, 64]

# conv_layers = [1, 2]

# dense_layers = [0, 1, 2]

# layer_sizes = [32, 64, 128]

# conv_layers = [1, 2, 3]

dense_layers = [0]

layer_sizes = [64]

conv_layers = [2]

for dense_layer in dense_layers:

for layer_size in layer_sizes:

for conv_layer in conv_layers:

NAME = "{}-conv-{}-nodes-{}-dense-{}".format(conv_layer, layer_size, dense_layer, int(time.time()))

print(NAME)

model = models.Sequential()

# model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)))

model.add(Conv2D(layer_size, (3, 3), input_shape=X.shape[1:]))

model.add(Activation('relu'))

# model.add(layers.MaxPooling2D((2, 2)))

model.add(MaxPooling2D(pool_size=(2, 2)))

# model.add(layers.Conv2D(64, (3, 3), activation='relu'))

# model.add(layers.MaxPooling2D((2, 2)))

# model.add(layers.Conv2D(64, (3, 3), activation='relu'))

for l in range(conv_layer-1):

model.add(Conv2D(layer_size, (3, 3)))

model.add(Activation('relu'))

## model.add(MaxPooling2D(pool_size=(2, 2)))

# model.add(layers.Flatten())

model.add(Flatten())

# model.add(layers.Dense(64, activation='relu'))

for _ in range(dense_layer):

model.add(Dense(layer_size))

model.add(Activation('relu'))

## model.add(Dense(1))

## model.add(Activation('sigmoid'))

model.add(layers.Dense(10))

tensorboard = TensorBoard(log_dir="logs/{}".format(NAME))

## model.compile(loss='binary_crossentropy',

## optimizer='adam',

## metrics=['accuracy'],

## )

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=['accuracy'])

history = model.fit(train_images, train_labels, epochs=10,

validation_data=(test_images, test_labels))

# history = model.fit(train_images, train_labels, epochs=10,

# validation_data=(test_images, test_labels))

# history = model.fit(X, y,

# batch_size=32,

# epochs=10,

# validation_split=0.3,

# callbacks=[tensorboard])

history = model.fit(X, train_labels,

epochs=10,

validation_data=(y, test_labels),

callbacks=[tensorboard])

# plt.plot(history.history['accuracy'], label='accuracy')

# plt.plot(history.history['val_accuracy'], label = 'val_accuracy')

plt.plot(history.history['loss'], label='{}-loss'.format(NAME))

plt.plot(history.history['val_loss'], label='{}-val-loss'.format(NAME))

plt.xlabel('Epoch')

plt.ylabel('Loss')

# plt.ylim([0.5, 1]) ## only for limiting ACCURACY y-axis!

plt.legend(loc='upper right')

# test_loss, test_acc = model.evaluate(test_images, test_labels, verbose=2)

test_loss, test_acc = model.evaluate(y, test_labels, verbose=2)

# print(NAME)

print('{}-test-loss'.format(NAME))

print(test_loss)

model.summary()

print('###################')

print()

Epoch 1/10

1563/1563 [==============================] - 21s 5ms/step - loss: 2.6357 - accuracy: 0.1065 - val_loss: 2.3020 - val_accuracy: 0.1001

Epoch 2/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.2992 - accuracy: 0.1050 - val_loss: 2.3008 - val_accuracy: 0.1034

Epoch 3/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.2957 - accuracy: 0.1083 - val_loss: 2.3053 - val_accuracy: 0.1050

Epoch 4/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.2921 - accuracy: 0.1109 - val_loss: 2.3145 - val_accuracy: 0.1047

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.2842 - accuracy: 0.1136 - val_loss: 2.3168 - val_accuracy: 0.1049

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.2776 - accuracy: 0.1185 - val_loss: 2.3339 - val_accuracy: 0.1052

Epoch 7/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.2636 - accuracy: 0.1303 - val_loss: 2.3038 - val_accuracy: 0.1316

Epoch 8/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.8896 - accuracy: 0.3221 - val_loss: 1.7924 - val_accuracy: 0.3690

Epoch 9/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.5310 - accuracy: 0.4602 - val_loss: 1.5549 - val_accuracy: 0.4618

Epoch 10/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.3991 - accuracy: 0.5135 - val_loss: 1.5511 - val_accuracy: 0.4764

Epoch 1/10

1563/1563 [==============================] - 9s 6ms/step - loss: 1.5843 - accuracy: 0.4276 - val_loss: 1.3019 - val_accuracy: 0.5273

Epoch 2/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.1943 - accuracy: 0.5812 - val_loss: 1.1308 - val_accuracy: 0.6083

Epoch 3/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.0458 - accuracy: 0.6348 - val_loss: 1.0748 - val_accuracy: 0.6280

Epoch 4/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9414 - accuracy: 0.6721 - val_loss: 1.0103 - val_accuracy: 0.6446

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.8633 - accuracy: 0.6996 - val_loss: 1.0163 - val_accuracy: 0.6531

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.8015 - accuracy: 0.7224 - val_loss: 0.9866 - val_accuracy: 0.6627

Epoch 7/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7441 - accuracy: 0.7408 - val_loss: 1.0069 - val_accuracy: 0.6629

Epoch 8/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.6927 - accuracy: 0.7620 - val_loss: 0.9959 - val_accuracy: 0.6688

Epoch 9/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.6469 - accuracy: 0.7784 - val_loss: 1.0343 - val_accuracy: 0.6670

Epoch 10/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.6047 - accuracy: 0.7917 - val_loss: 1.0803 - val_accuracy: 0.6614

313/313 - 1s - loss: 1.0803 - accuracy: 0.6614 - 1s/epoch - 3ms/step

2-conv-64-nodes-0-dense-1679241463-test-loss

1.0802648067474365

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 30, 30, 64) 1792

activation (Activation) (None, 30, 30, 64) 0

max_pooling2d (MaxPooling2D (None, 15, 15, 64) 0

)

conv2d_1 (Conv2D) (None, 13, 13, 64) 36928

activation_1 (Activation) (None, 13, 13, 64) 0

flatten (Flatten) (None, 10816) 0

dense (Dense) (None, 10) 108170

=================================================================

Total params: 146,890

Trainable params: 146,890

Non-trainable params: 0

_________________________________________________________________

###################

### Given the lack of robustness, below is the originally tuned HPO

"""

2-conv-64-nodes-0-dense-1679220205

Epoch 1/10

1563/1563 [==============================] - 21s 5ms/step - loss: 2.6045 - accuracy: 0.1186 - val_loss: 2.3005 - val_accuracy: 0.1067

Epoch 2/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.3001 - accuracy: 0.1160 - val_loss: 2.3030 - val_accuracy: 0.1015

Epoch 3/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.2984 - accuracy: 0.1060 - val_loss: 2.3062 - val_accuracy: 0.1239

Epoch 4/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.2910 - accuracy: 0.1149 - val_loss: 2.2955 - val_accuracy: 0.1244

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.2590 - accuracy: 0.1394 - val_loss: 2.0051 - val_accuracy: 0.2812

Epoch 6/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.8516 - accuracy: 0.3294 - val_loss: 1.6329 - val_accuracy: 0.4263

Epoch 7/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.4937 - accuracy: 0.4750 - val_loss: 1.5108 - val_accuracy: 0.4740

Epoch 8/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.0614 - accuracy: 0.6286 - val_loss: 1.0904 - val_accuracy: 0.6148

Epoch 4/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.9518 - accuracy: 0.6678 - val_loss: 1.0373 - val_accuracy: 0.6348

Epoch 5/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.8739 - accuracy: 0.6973 - val_loss: 1.0326 - val_accuracy: 0.6416

Epoch 6/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.8003 - accuracy: 0.7217 - val_loss: 1.0316 - val_accuracy: 0.6495

Epoch 7/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7401 - accuracy: 0.7433 - val_loss: 1.0059 - val_accuracy: 0.6638

Epoch 8/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.6768 - accuracy: 0.7649 - val_loss: 1.0160 - val_accuracy: 0.6638

Epoch 9/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.6289 - accuracy: 0.7825 - val_loss: 1.0247 - val_accuracy: 0.6668

Epoch 10/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.5761 - accuracy: 0.8008 - val_loss: 1.0904 - val_accuracy: 0.6602

313/313 - 1s - loss: 1.0904 - accuracy: 0.6602 - 781ms/epoch - 2ms/step

2-conv-64-nodes-0-dense-1679220205-test-loss

1.090354561805725

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param

=================================================================

conv2d (Conv2D) (None, 30, 30, 64) 1792

activation (Activation) (None, 30, 30, 64) 0

max_pooling2d (MaxPooling2D (None, 15, 15, 64) 0

)

conv2d_1 (Conv2D) (None, 13, 13, 64) 36928

activation_1 (Activation) (None, 13, 13, 64) 0

flatten (Flatten) (None, 10816) 0

dense (Dense) (None, 10) 108170

=================================================================

Total params: 146,890

Trainable params: 146,890

Non-trainable params: 0

_________________________________________________________________

###################

"""

print()

HPO Result Interpretation

The tuned hyperparameter of 8 epochs with such 2-Conv-64-Nodes-0-Dense architecture shows a promising generalisation, given that above Val_Loss plot clearly proofs that only after 8 epochs overfitting satarts to creep in!

#@title ### Additional Shallower Sweep

dense_layers = [0, 1]

layer_sizes = [32, 64]

conv_layers = [1, 2]

"""

1-conv-32-nodes-0-dense-1679223061

Epoch 1/10

1563/1563 [==============================] - 7s 4ms/step - loss: 3.2266 - accuracy: 0.2638 - val_loss: 2.0506 - val_accuracy: 0.2697

Epoch 2/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.8387 - accuracy: 0.3696 - val_loss: 1.8593 - val_accuracy: 0.3550

Epoch 3/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.6988 - accuracy: 0.4188 - val_loss: 1.7698 - val_accuracy: 0.4108

Epoch 4/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.6267 - accuracy: 0.4455 - val_loss: 1.7867 - val_accuracy: 0.4270

Epoch 5/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.5741 - accuracy: 0.4632 - val_loss: 2.1164 - val_accuracy: 0.3472

Epoch 6/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.5624 - accuracy: 0.4655 - val_loss: 1.8725 - val_accuracy: 0.4251

Epoch 7/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.4899 - accuracy: 0.4947 - val_loss: 1.9966 - val_accuracy: 0.3945

Epoch 8/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.4815 - accuracy: 0.4920 - val_loss: 2.0890 - val_accuracy: 0.3607

Epoch 9/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4473 - accuracy: 0.5030 - val_loss: 2.0184 - val_accuracy: 0.4416

Epoch 10/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4218 - accuracy: 0.5156 - val_loss: 2.1320 - val_accuracy: 0.4223

Epoch 1/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.5610 - accuracy: 0.1055 - val_loss: 2.2902 - val_accuracy: 0.1140

Epoch 2/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.2968 - accuracy: 0.1099 - val_loss: 2.2992 - val_accuracy: 0.1153

Epoch 3/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.2948 - accuracy: 0.1100 - val_loss: 2.3059 - val_accuracy: 0.1129

Epoch 4/10

1563/1563 [==============================] - 6s 4ms/step - loss: 2.1075 - accuracy: 0.2181 - val_loss: 1.7849 - val_accuracy: 0.3646

Epoch 5/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.5749 - accuracy: 0.4373 - val_loss: 1.5402 - val_accuracy: 0.4535

Epoch 6/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3788 - accuracy: 0.5126 - val_loss: 1.4532 - val_accuracy: 0.4913

Epoch 7/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.2622 - accuracy: 0.5573 - val_loss: 1.4423 - val_accuracy: 0.5089

Epoch 8/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1782 - accuracy: 0.5897 - val_loss: 1.4288 - val_accuracy: 0.5280

Epoch 9/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.1126 - accuracy: 0.6118 - val_loss: 1.5047 - val_accuracy: 0.5073

Epoch 10/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.0668 - accuracy: 0.6272 - val_loss: 1.5209 - val_accuracy: 0.5236

Epoch 1/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.5852 - accuracy: 0.4312 - val_loss: 1.3073 - val_accuracy: 0.5311

Epoch 2/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2374 - accuracy: 0.5662 - val_loss: 1.2219 - val_accuracy: 0.5698

Epoch 3/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1051 - accuracy: 0.6134 - val_loss: 1.1466 - val_accuracy: 0.5974

Epoch 4/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.0263 - accuracy: 0.6443 - val_loss: 1.0894 - val_accuracy: 0.6240

Epoch 5/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.9605 - accuracy: 0.6652 - val_loss: 1.0808 - val_accuracy: 0.6267

Epoch 6/10

1563/1563 [==============================] - 6s 4ms/step - loss: 0.9065 - accuracy: 0.6849 - val_loss: 1.0372 - val_accuracy: 0.6401

Epoch 7/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.8664 - accuracy: 0.6996 - val_loss: 1.0409 - val_accuracy: 0.6426

Epoch 8/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.8331 - accuracy: 0.7123 - val_loss: 1.0649 - val_accuracy: 0.6345

Epoch 9/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.8046 - accuracy: 0.7212 - val_loss: 1.0298 - val_accuracy: 0.6464

Epoch 10/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.7737 - accuracy: 0.7307 - val_loss: 1.0848 - val_accuracy: 0.6356

313/313 - 1s - loss: 1.0848 - accuracy: 0.6356 - 714ms/epoch - 2ms/step

2-conv-32-nodes-0-dense-1679223211-test-loss

1.0848363637924194

Model: "sequential_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_3 (Conv2D) (None, 30, 30, 32) 896

activation_3 (Activation) (None, 30, 30, 32) 0

max_pooling2d_2 (MaxPooling (None, 15, 15, 32) 0

2D)

conv2d_4 (Conv2D) (None, 13, 13, 32) 9248

activation_4 (Activation) (None, 13, 13, 32) 0

flatten_2 (Flatten) (None, 5408) 0

dense_2 (Dense) (None, 10) 54090

=================================================================

Total params: 64,234

Trainable params: 64,234

Non-trainable params: 0

_________________________________________________________________

###################

1-conv-64-nodes-0-dense-1679223366

Epoch 1/10

1563/1563 [==============================] - 8s 4ms/step - loss: 4.1663 - accuracy: 0.3002 - val_loss: 1.8713 - val_accuracy: 0.3476

Epoch 2/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3232 - accuracy: 0.5361 - val_loss: 1.2543 - val_accuracy: 0.5627

Epoch 3/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.1527 - accuracy: 0.5986 - val_loss: 1.1820 - val_accuracy: 0.5861

Epoch 4/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.0468 - accuracy: 0.6366 - val_loss: 1.1069 - val_accuracy: 0.6148

Epoch 5/10

1563/1563 [==============================] - 6s 4ms/step - loss: 0.9579 - accuracy: 0.6703 - val_loss: 1.0935 - val_accuracy: 0.6221

Epoch 6/10

1563/1563 [==============================] - 6s 4ms/step - loss: 0.8937 - accuracy: 0.6943 - val_loss: 1.0904 - val_accuracy: 0.6271

Epoch 7/10

1563/1563 [==============================] - 6s 4ms/step - loss: 0.8393 - accuracy: 0.7122 - val_loss: 1.0626 - val_accuracy: 0.6310

Epoch 8/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.7912 - accuracy: 0.7294 - val_loss: 1.1285 - val_accuracy: 0.6227

Epoch 9/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.7524 - accuracy: 0.7417 - val_loss: 1.0879 - val_accuracy: 0.6349

Epoch 10/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.7113 - accuracy: 0.7559 - val_loss: 1.0917 - val_accuracy: 0.6444

313/313 - 1s - loss: 1.0917 - accuracy: 0.6444 - 738ms/epoch - 2ms/step

1-conv-64-nodes-0-dense-1679223366-test-loss

1.0916939973831177

Model: "sequential_3"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_5 (Conv2D) (None, 30, 30, 64) 1792

activation_5 (Activation) (None, 30, 30, 64) 0

max_pooling2d_3 (MaxPooling (None, 15, 15, 64) 0

2D)

flatten_3 (Flatten) (None, 14400) 0

dense_3 (Dense) (None, 10) 144010

=================================================================

Total params: 145,802

Trainable params: 145,802

Non-trainable params: 0

_________________________________________________________________

###################

2-conv-64-nodes-0-dense-1679223501

Epoch 1/10

1563/1563 [==============================] - 9s 5ms/step - loss: 2.0994 - accuracy: 0.3383 - val_loss: 1.5247 - val_accuracy: 0.4465

Epoch 2/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3994 - accuracy: 0.5065 - val_loss: 1.3174 - val_accuracy: 0.5333

Epoch 3/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.2483 - accuracy: 0.5643 - val_loss: 1.2766 - val_accuracy: 0.5576

Epoch 4/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1385 - accuracy: 0.6059 - val_loss: 1.3041 - val_accuracy: 0.5660

Epoch 5/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.0720 - accuracy: 0.6291 - val_loss: 1.3472 - val_accuracy: 0.5599

Epoch 6/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.0017 - accuracy: 0.6557 - val_loss: 1.3514 - val_accuracy: 0.5617

Epoch 7/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.9258 - accuracy: 0.6832 - val_loss: 1.4297 - val_accuracy: 0.5723

Epoch 8/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.8661 - accuracy: 0.7039 - val_loss: 1.5569 - val_accuracy: 0.5625

Epoch 9/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.7883 - accuracy: 0.7294 - val_loss: 1.6453 - val_accuracy: 0.5563

Epoch 10/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.7396 - accuracy: 0.7454 - val_loss: 1.8108 - val_accuracy: 0.5530

Epoch 1/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.5444 - accuracy: 0.4482 - val_loss: 1.3110 - val_accuracy: 0.5318

Epoch 2/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.2143 - accuracy: 0.5725 - val_loss: 1.1781 - val_accuracy: 0.5788

Epoch 3/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.0864 - accuracy: 0.6179 - val_loss: 1.0967 - val_accuracy: 0.6182

Epoch 4/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.9877 - accuracy: 0.6532 - val_loss: 1.0662 - val_accuracy: 0.6349

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9084 - accuracy: 0.6836 - val_loss: 1.0635 - val_accuracy: 0.6355

Epoch 6/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.8400 - accuracy: 0.7096 - val_loss: 1.0298 - val_accuracy: 0.6503

Epoch 7/10

1360/1563 [=========================>....] - ETA: 0s - loss: 0.7850 - accuracy: 0.7275

IOPub message rate exceeded.

The notebook server will temporarily stop sending output

to the client in order to avoid crashing it.

To change this limit, set the config variable

`--NotebookApp.iopub_msg_rate_limit`.

Current values:

NotebookApp.iopub_msg_rate_limit=1000.0 (msgs/sec)

NotebookApp.rate_limit_window=3.0 (secs)

1563/1563 [==============================] - 7s 4ms/step - loss: 2.3026 - accuracy: 0.0979 - val_loss: 2.3026 - val_accuracy: 0.1000

Epoch 8/10

1563/1563 [==============================] - 6s 4ms/step - loss: 2.3026 - accuracy: 0.0984 - val_loss: 2.3026 - val_accuracy: 0.1000

Epoch 9/10

1563/1563 [==============================] - 6s 4ms/step - loss: 2.3027 - accuracy: 0.0980 - val_loss: 2.3026 - val_accuracy: 0.1000

Epoch 10/10

1563/1563 [==============================] - 6s 4ms/step - loss: 2.3027 - accuracy: 0.0963 - val_loss: 2.3026 - val_accuracy: 0.1000

Epoch 1/10

1563/1563 [==============================] - 7s 4ms/step - loss: 2.2553 - accuracy: 0.1165 - val_loss: 1.8898 - val_accuracy: 0.2509

Epoch 2/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.6832 - accuracy: 0.3369 - val_loss: 1.5876 - val_accuracy: 0.3746

Epoch 3/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.5546 - accuracy: 0.3927 - val_loss: 1.5224 - val_accuracy: 0.4041

Epoch 4/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4970 - accuracy: 0.4151 - val_loss: 1.4810 - val_accuracy: 0.4294

Epoch 5/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4502 - accuracy: 0.4359 - val_loss: 1.4731 - val_accuracy: 0.4360

Epoch 6/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4148 - accuracy: 0.4538 - val_loss: 1.4276 - val_accuracy: 0.4524

Epoch 7/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3866 - accuracy: 0.4661 - val_loss: 1.4130 - val_accuracy: 0.4677

Epoch 8/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.3083 - accuracy: 0.5116 - val_loss: 1.3118 - val_accuracy: 0.5211

Epoch 9/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2433 - accuracy: 0.5391 - val_loss: 1.2759 - val_accuracy: 0.5304

Epoch 10/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.2076 - accuracy: 0.5508 - val_loss: 1.2855 - val_accuracy: 0.5346

313/313 - 1s - loss: 1.2855 - accuracy: 0.5346 - 775ms/epoch - 2ms/step

1-conv-32-nodes-1-dense-1679223660-test-loss

1.2855437994003296

Model: "sequential_5"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_8 (Conv2D) (None, 30, 30, 32) 896

activation_8 (Activation) (None, 30, 30, 32) 0

max_pooling2d_5 (MaxPooling (None, 15, 15, 32) 0

2D)

flatten_5 (Flatten) (None, 7200) 0

dense_5 (Dense) (None, 32) 230432

activation_9 (Activation) (None, 32) 0

dense_6 (Dense) (None, 10) 330

=================================================================

Total params: 231,658

Trainable params: 231,658

Non-trainable params: 0

_________________________________________________________________

###################

2-conv-32-nodes-1-dense-1679223829

Epoch 1/10

1563/1563 [==============================] - 9s 5ms/step - loss: 2.2503 - accuracy: 0.2111 - val_loss: 1.7721 - val_accuracy: 0.3251

Epoch 2/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.6540 - accuracy: 0.3883 - val_loss: 1.5292 - val_accuracy: 0.4341

Epoch 3/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.4134 - accuracy: 0.4890 - val_loss: 1.3531 - val_accuracy: 0.5304

Epoch 4/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.2283 - accuracy: 0.5641 - val_loss: 1.2487 - val_accuracy: 0.5635

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.0644 - accuracy: 0.6266 - val_loss: 1.2070 - val_accuracy: 0.5858

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9485 - accuracy: 0.6679 - val_loss: 1.2496 - val_accuracy: 0.5877

Epoch 7/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.8576 - accuracy: 0.6999 - val_loss: 1.2671 - val_accuracy: 0.5888

Epoch 8/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7800 - accuracy: 0.7263 - val_loss: 1.2532 - val_accuracy: 0.6054

Epoch 9/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7127 - accuracy: 0.7502 - val_loss: 1.3893 - val_accuracy: 0.5796

Epoch 10/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.6583 - accuracy: 0.7657 - val_loss: 1.4531 - val_accuracy: 0.5952

Epoch 1/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.6588 - accuracy: 0.3952 - val_loss: 1.3280 - val_accuracy: 0.5157

Epoch 2/10

373/1563 [======>.......................] - ETA: 4s - loss: 1.2982 - accuracy: 0.5343

IOPub message rate exceeded.

The notebook server will temporarily stop sending output

to the client in order to avoid crashing it.

To change this limit, set the config variable

`--NotebookApp.iopub_msg_rate_limit`.

Current values:

NotebookApp.iopub_msg_rate_limit=1000.0 (msgs/sec)

NotebookApp.rate_limit_window=3.0 (secs)

1563/1563 [==============================] - 8s 5ms/step - loss: 0.5571 - accuracy: 0.8055 - val_loss: 1.0499 - val_accuracy: 0.6668

313/313 - 1s - loss: 1.0499 - accuracy: 0.6668 - 750ms/epoch - 2ms/step

2-conv-32-nodes-1-dense-1679223829-test-loss

1.0499475002288818

Model: "sequential_6"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_9 (Conv2D) (None, 30, 30, 32) 896

activation_10 (Activation) (None, 30, 30, 32) 0

max_pooling2d_6 (MaxPooling (None, 15, 15, 32) 0

2D)

conv2d_10 (Conv2D) (None, 13, 13, 32) 9248

activation_11 (Activation) (None, 13, 13, 32) 0

flatten_6 (Flatten) (None, 5408) 0

dense_7 (Dense) (None, 32) 173088

activation_12 (Activation) (None, 32) 0

dense_8 (Dense) (None, 10) 330

=================================================================

Total params: 183,562

Trainable params: 183,562

Non-trainable params: 0

_________________________________________________________________

###################

1-conv-64-nodes-1-dense-1679223998

Epoch 1/10

1563/1563 [==============================] - 8s 4ms/step - loss: 3.0291 - accuracy: 0.3193 - val_loss: 1.7007 - val_accuracy: 0.4089

Epoch 2/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.6126 - accuracy: 0.4399 - val_loss: 1.5950 - val_accuracy: 0.4373

Epoch 3/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.4591 - accuracy: 0.4883 - val_loss: 1.6626 - val_accuracy: 0.4245

Epoch 4/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.3247 - accuracy: 0.5313 - val_loss: 1.5539 - val_accuracy: 0.4782

Epoch 5/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.1944 - accuracy: 0.5800 - val_loss: 1.3724 - val_accuracy: 0.5335

Epoch 6/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.0994 - accuracy: 0.6162 - val_loss: 1.4128 - val_accuracy: 0.5306

Epoch 7/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.0108 - accuracy: 0.6490 - val_loss: 1.4198 - val_accuracy: 0.5415

Epoch 8/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.9487 - accuracy: 0.6703 - val_loss: 1.3904 - val_accuracy: 0.5489

Epoch 9/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.8792 - accuracy: 0.6957 - val_loss: 1.4447 - val_accuracy: 0.5569

Epoch 10/10

1563/1563 [==============================] - 6s 4ms/step - loss: 0.8250 - accuracy: 0.7141 - val_loss: 1.5432 - val_accuracy: 0.5576

Epoch 1/10

1563/1563 [==============================] - 9s 5ms/step - loss: 1.7335 - accuracy: 0.3717 - val_loss: 1.4862 - val_accuracy: 0.4531

Epoch 2/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.3894 - accuracy: 0.4914 - val_loss: 1.3499 - val_accuracy: 0.5133

Epoch 3/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2393 - accuracy: 0.5494 - val_loss: 1.2390 - val_accuracy: 0.5447

Epoch 4/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.1399 - accuracy: 0.5860 - val_loss: 1.1662 - val_accuracy: 0.5763

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.2415 - accuracy: 0.5600 - val_loss: 1.2776 - val_accuracy: 0.5549

Epoch 4/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.0626 - accuracy: 0.6273 - val_loss: 1.1947 - val_accuracy: 0.5955

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9177 - accuracy: 0.6801 - val_loss: 1.1990 - val_accuracy: 0.5980

Epoch 6/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.7776 - accuracy: 0.7295 - val_loss: 1.2377 - val_accuracy: 0.6060

Epoch 7/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.6671 - accuracy: 0.7690 - val_loss: 1.4003 - val_accuracy: 0.6010

Epoch 8/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.5534 - accuracy: 0.8091 - val_loss: 1.5183 - val_accuracy: 0.6010

Epoch 9/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.4814 - accuracy: 0.8342 - val_loss: 1.5385 - val_accuracy: 0.6024

Epoch 10/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.4158 - accuracy: 0.8575 - val_loss: 1.8444 - val_accuracy: 0.5952

Epoch 1/10

1563/1563 [==============================] - 9s 5ms/step - loss: 1.5556 - accuracy: 0.4350 - val_loss: 1.2440 - val_accuracy: 0.5538

Epoch 2/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.1009 - accuracy: 0.6054 - val_loss: 1.0688 - val_accuracy: 0.6250

Epoch 3/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9223 - accuracy: 0.6738 - val_loss: 0.9790 - val_accuracy: 0.6605

Epoch 4/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7815 - accuracy: 0.7255 - val_loss: 0.9468 - val_accuracy: 0.6712

Epoch 5/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.6563 - accuracy: 0.7708 - val_loss: 0.9420 - val_accuracy: 0.6888

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.5381 - accuracy: 0.8129 - val_loss: 0.9870 - val_accuracy: 0.6891

Epoch 7/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.4338 - accuracy: 0.8490 - val_loss: 1.0926 - val_accuracy: 0.6833

Epoch 8/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.3412 - accuracy: 0.8811 - val_loss: 1.2044 - val_accuracy: 0.6769

Epoch 9/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.2657 - accuracy: 0.9089 - val_loss: 1.3245 - val_accuracy: 0.6757

Epoch 10/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.2068 - accuracy: 0.9286 - val_loss: 1.4957 - val_accuracy: 0.6714

313/313 - 1s - loss: 1.4957 - accuracy: 0.6714 - 946ms/epoch - 3ms/step

2-conv-64-nodes-1-dense-1679224143-test-loss

1.495668888092041

Model: "sequential_8"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_12 (Conv2D) (None, 30, 30, 64) 1792

activation_15 (Activation) (None, 30, 30, 64) 0

max_pooling2d_8 (MaxPooling (None, 15, 15, 64) 0

2D)

conv2d_13 (Conv2D) (None, 13, 13, 64) 36928

activation_16 (Activation) (None, 13, 13, 64) 0

flatten_8 (Flatten) (None, 10816) 0

dense_11 (Dense) (None, 64) 692288

activation_17 (Activation) (None, 64) 0

dense_12 (Dense) (None, 10) 650

=================================================================

Total params: 731,658

Trainable params: 731,658

Non-trainable params: 0

_________________________________________________________________

###################

"""

print()

The tuned hyperparameter of 4 epochs with the 2-Conv-64-Nodes-1-Dense architecture shows a promising generalisation, given that above Val_Loss plot clearly proofs that only after 4 epochs overfitting satarts to creep in!

#@title ### Additional Wider Sweep, just in case!

## Confirming the already concluded HPO tuned generalisation proofed as well above,

## alas at a huge non-eco-freindly GPU/TPU empact!!

dense_layers = [0, 1, 2]

layer_sizes = [32, 64, 128]

conv_layers = [1, 2, 3]

## Accuracy Logs

"""

1-conv-32-nodes-0-dense-1679181921

Epoch 1/10

1563/1563 [==============================] - 21s 4ms/step - loss: 5.1547 - accuracy: 0.2528 - val_loss: 2.0102 - val_accuracy: 0.2794

Epoch 2/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.8490 - accuracy: 0.3568 - val_loss: 1.9008 - val_accuracy: 0.3713

Epoch 3/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.7777 - accuracy: 0.3790 - val_loss: 1.7916 - val_accuracy: 0.3769

Epoch 4/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.6883 - accuracy: 0.4151 - val_loss: 1.9025 - val_accuracy: 0.3238

Epoch 5/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.6261 - accuracy: 0.4349 - val_loss: 1.7901 - val_accuracy: 0.4150

Epoch 6/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.6009 - accuracy: 0.4434 - val_loss: 2.0222 - val_accuracy: 0.3101

Epoch 7/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.5836 - accuracy: 0.4489 - val_loss: 2.0530 - val_accuracy: 0.4002

Epoch 8/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.5636 - accuracy: 0.4529 - val_loss: 1.9681 - val_accuracy: 0.4028

Epoch 9/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.5181 - accuracy: 0.4711 - val_loss: 2.1238 - val_accuracy: 0.3902

Epoch 10/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.4853 - accuracy: 0.4839 - val_loss: 2.1133 - val_accuracy: 0.3865

Epoch 1/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.7468 - accuracy: 0.3903 - val_loss: 1.4931 - val_accuracy: 0.4787

Epoch 2/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.3653 - accuracy: 0.5250 - val_loss: 1.3346 - val_accuracy: 0.5312

Epoch 3/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2020 - accuracy: 0.5812 - val_loss: 1.1942 - val_accuracy: 0.5865

Epoch 4/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.1156 - accuracy: 0.6139 - val_loss: 1.1416 - val_accuracy: 0.6047

Epoch 5/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.0501 - accuracy: 0.6349 - val_loss: 1.1848 - val_accuracy: 0.5885

Epoch 6/10

1563/1563 [==============================] - 6s 4ms/step - loss: 0.9974 - accuracy: 0.6554 - val_loss: 1.0787 - val_accuracy: 0.6280

Epoch 7/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.9580 - accuracy: 0.6695 - val_loss: 1.1139 - val_accuracy: 0.6177

Epoch 8/10

1563/1563 [==============================] - 6s 4ms/step - loss: 0.9208 - accuracy: 0.6815 - val_loss: 1.1016 - val_accuracy: 0.6290

Epoch 9/10

1563/1563 [==============================] - 6s 4ms/step - loss: 0.8945 - accuracy: 0.6908 - val_loss: 1.0676 - val_accuracy: 0.6326

Epoch 10/10

1563/1563 [==============================] - 6s 4ms/step - loss: 0.8676 - accuracy: 0.7001 - val_loss: 1.1176 - val_accuracy: 0.6244

313/313 - 1s - loss: 1.1176 - accuracy: 0.6244 - 637ms/epoch - 2ms/step

0.6244000196456909

2-conv-32-nodes-0-dense-1679182093

Epoch 1/10

1563/1563 [==============================] - 9s 5ms/step - loss: 2.5233 - accuracy: 0.1076 - val_loss: 2.3019 - val_accuracy: 0.1042

Epoch 2/10

1563/1563 [==============================] - 7s 4ms/step - loss: 2.2965 - accuracy: 0.1112 - val_loss: 2.3168 - val_accuracy: 0.1138

Epoch 3/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.2905 - accuracy: 0.1162 - val_loss: 2.3109 - val_accuracy: 0.1051

Epoch 4/10

1563/1563 [==============================] - 7s 4ms/step - loss: 2.2869 - accuracy: 0.1133 - val_loss: 2.3124 - val_accuracy: 0.1030

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.2790 - accuracy: 0.1180 - val_loss: 2.3269 - val_accuracy: 0.1115

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.1621 - accuracy: 0.1895 - val_loss: 1.9424 - val_accuracy: 0.2890

Epoch 7/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.7616 - accuracy: 0.3575 - val_loss: 1.6675 - val_accuracy: 0.4040

Epoch 8/10

1563/1563 [==============================] - 9s 5ms/step - loss: 1.5445 - accuracy: 0.4528 - val_loss: 1.5984 - val_accuracy: 0.4472

Epoch 9/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.4046 - accuracy: 0.5020 - val_loss: 1.5554 - val_accuracy: 0.4635

Epoch 10/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.3106 - accuracy: 0.5371 - val_loss: 1.5974 - val_accuracy: 0.4522

Epoch 1/10

1563/1563 [==============================] - 9s 6ms/step - loss: 1.6487 - accuracy: 0.4133 - val_loss: 1.3508 - val_accuracy: 0.5143

Epoch 2/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.2782 - accuracy: 0.5480 - val_loss: 1.2304 - val_accuracy: 0.5633

Epoch 3/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.1434 - accuracy: 0.5998 - val_loss: 1.1468 - val_accuracy: 0.6001

Epoch 4/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.0511 - accuracy: 0.6340 - val_loss: 1.1639 - val_accuracy: 0.5970

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9872 - accuracy: 0.6571 - val_loss: 1.0730 - val_accuracy: 0.6261

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9340 - accuracy: 0.6766 - val_loss: 1.0595 - val_accuracy: 0.6338

Epoch 7/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.8937 - accuracy: 0.6889 - val_loss: 1.0403 - val_accuracy: 0.6371

Epoch 8/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.8548 - accuracy: 0.7041 - val_loss: 1.0901 - val_accuracy: 0.6336

Epoch 9/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.8229 - accuracy: 0.7163 - val_loss: 1.0631 - val_accuracy: 0.6351

Epoch 10/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.7945 - accuracy: 0.7265 - val_loss: 1.0685 - val_accuracy: 0.6416

313/313 - 1s - loss: 1.0685 - accuracy: 0.6416 - 996ms/epoch - 3ms/step

0.6416000127792358

1-conv-64-nodes-0-dense-1679182255

Epoch 1/10

1563/1563 [==============================] - 7s 4ms/step - loss: 4.3008 - accuracy: 0.3094 - val_loss: 1.8540 - val_accuracy: 0.3590

Epoch 2/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.7377 - accuracy: 0.4067 - val_loss: 1.7681 - val_accuracy: 0.3984

Epoch 3/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.6541 - accuracy: 0.4337 - val_loss: 1.7667 - val_accuracy: 0.4161

Epoch 4/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.6008 - accuracy: 0.4529 - val_loss: 1.8395 - val_accuracy: 0.3808

Epoch 5/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.5791 - accuracy: 0.4557 - val_loss: 1.8903 - val_accuracy: 0.4175

Epoch 6/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.5313 - accuracy: 0.4762 - val_loss: 1.8959 - val_accuracy: 0.4093

Epoch 7/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4931 - accuracy: 0.4880 - val_loss: 2.0530 - val_accuracy: 0.4155

Epoch 8/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4633 - accuracy: 0.5004 - val_loss: 2.2687 - val_accuracy: 0.3246

Epoch 9/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4464 - accuracy: 0.5027 - val_loss: 2.2999 - val_accuracy: 0.3647

Epoch 10/10

1563/1563 [==============================] - 6s 4ms/step - loss: 1.4918 - accuracy: 0.4824 - val_loss: 2.4894 - val_accuracy: 0.3473

Epoch 1/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.6667 - accuracy: 0.4212 - val_loss: 1.3539 - val_accuracy: 0.5269

Epoch 2/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.2512 - accuracy: 0.5645 - val_loss: 1.2316 - val_accuracy: 0.5736

Epoch 3/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1130 - accuracy: 0.6182 - val_loss: 1.1730 - val_accuracy: 0.5820

Epoch 4/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.0251 - accuracy: 0.6458 - val_loss: 1.0827 - val_accuracy: 0.6215

Epoch 5/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.9557 - accuracy: 0.6721 - val_loss: 1.0590 - val_accuracy: 0.6341

Epoch 6/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.8964 - accuracy: 0.6919 - val_loss: 1.0889 - val_accuracy: 0.6244

Epoch 7/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.8477 - accuracy: 0.7072 - val_loss: 1.0492 - val_accuracy: 0.6413

Epoch 8/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.8022 - accuracy: 0.7236 - val_loss: 1.0751 - val_accuracy: 0.6382

Epoch 9/10

1563/1563 [==============================] - 6s 4ms/step - loss: 0.7637 - accuracy: 0.7375 - val_loss: 1.0617 - val_accuracy: 0.6427

Epoch 10/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7308 - accuracy: 0.7495 - val_loss: 1.1038 - val_accuracy: 0.6377

313/313 - 1s - loss: 1.1038 - accuracy: 0.6377 - 659ms/epoch - 2ms/step

0.6377000212669373

2-conv-64-nodes-0-dense-1679182411

Epoch 1/10

1563/1563 [==============================] - 9s 5ms/step - loss: 2.5559 - accuracy: 0.1100 - val_loss: 2.2866 - val_accuracy: 0.1307

Epoch 2/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.3017 - accuracy: 0.1051 - val_loss: 2.3022 - val_accuracy: 0.1017

Epoch 3/10

1563/1563 [==============================] - 9s 6ms/step - loss: 2.2985 - accuracy: 0.1059 - val_loss: 2.3129 - val_accuracy: 0.1026

Epoch 4/10

1563/1563 [==============================] - 9s 5ms/step - loss: 2.2943 - accuracy: 0.1055 - val_loss: 2.3164 - val_accuracy: 0.1030

Epoch 5/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.2901 - accuracy: 0.1092 - val_loss: 2.3416 - val_accuracy: 0.1025

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.2856 - accuracy: 0.1080 - val_loss: 2.3719 - val_accuracy: 0.1079

Epoch 7/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.2793 - accuracy: 0.1127 - val_loss: 2.3433 - val_accuracy: 0.1020

Epoch 8/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.2755 - accuracy: 0.1151 - val_loss: 2.3720 - val_accuracy: 0.1035

Epoch 9/10

1563/1563 [==============================] - 9s 6ms/step - loss: 2.2677 - accuracy: 0.1176 - val_loss: 2.3660 - val_accuracy: 0.1043

Epoch 10/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.2625 - accuracy: 0.1193 - val_loss: 2.3960 - val_accuracy: 0.1052

Epoch 1/10

1563/1563 [==============================] - 9s 6ms/step - loss: 1.5611 - accuracy: 0.4437 - val_loss: 1.3167 - val_accuracy: 0.5275

Epoch 2/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.2432 - accuracy: 0.5629 - val_loss: 1.2435 - val_accuracy: 0.5627

Epoch 3/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.1077 - accuracy: 0.6141 - val_loss: 1.0888 - val_accuracy: 0.6183

Epoch 4/10

1563/1563 [==============================] - 9s 6ms/step - loss: 1.0049 - accuracy: 0.6493 - val_loss: 1.0898 - val_accuracy: 0.6292

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9142 - accuracy: 0.6830 - val_loss: 0.9716 - val_accuracy: 0.6662

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.8419 - accuracy: 0.7100 - val_loss: 0.9763 - val_accuracy: 0.6622

Epoch 7/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7893 - accuracy: 0.7275 - val_loss: 0.9875 - val_accuracy: 0.6605

Epoch 8/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7420 - accuracy: 0.7444 - val_loss: 1.0231 - val_accuracy: 0.6596

Epoch 9/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7027 - accuracy: 0.7577 - val_loss: 0.9961 - val_accuracy: 0.6673

Epoch 10/10

1563/1563 [==============================] - 9s 6ms/step - loss: 0.6588 - accuracy: 0.7726 - val_loss: 1.0041 - val_accuracy: 0.6695

313/313 - 1s - loss: 1.0041 - accuracy: 0.6695 - 703ms/epoch - 2ms/step

0.6694999933242798

1-conv-32-nodes-1-dense-1679182578

Epoch 1/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.6233 - accuracy: 0.0987 - val_loss: 2.3026 - val_accuracy: 0.1002

Epoch 2/10

1563/1563 [==============================] - 6s 4ms/step - loss: 2.3030 - accuracy: 0.0982 - val_loss: 2.3026 - val_accuracy: 0.1001

Epoch 3/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.3026 - accuracy: 0.0992 - val_loss: 2.3027 - val_accuracy: 0.1001

Epoch 4/10

1563/1563 [==============================] - 6s 4ms/step - loss: 2.3027 - accuracy: 0.0987 - val_loss: 2.3026 - val_accuracy: 0.1001

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.3027 - accuracy: 0.0984 - val_loss: 2.3026 - val_accuracy: 0.1001

Epoch 6/10

1563/1563 [==============================] - 6s 4ms/step - loss: 2.3027 - accuracy: 0.0978 - val_loss: 2.3025 - val_accuracy: 0.1000

Epoch 7/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.3026 - accuracy: 0.1005 - val_loss: 2.3026 - val_accuracy: 0.1001

Epoch 8/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.3027 - accuracy: 0.0976 - val_loss: 2.3025 - val_accuracy: 0.1001

Epoch 9/10

1563/1563 [==============================] - 7s 4ms/step - loss: 2.3027 - accuracy: 0.0988 - val_loss: 2.3026 - val_accuracy: 0.1001

Epoch 10/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.3027 - accuracy: 0.0980 - val_loss: 2.3026 - val_accuracy: 0.1001

Epoch 1/10

1563/1563 [==============================] - 7s 4ms/step - loss: 2.3028 - accuracy: 0.0974 - val_loss: 2.3026 - val_accuracy: 0.1000

Epoch 2/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.3028 - accuracy: 0.0986 - val_loss: 2.3026 - val_accuracy: 0.1000

Epoch 3/10

1563/1563 [==============================] - 6s 4ms/step - loss: 2.3028 - accuracy: 0.0990 - val_loss: 2.3026 - val_accuracy: 0.1000

Epoch 4/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.3028 - accuracy: 0.0974 - val_loss: 2.3026 - val_accuracy: 0.1000

Epoch 5/10

1563/1563 [==============================] - 7s 4ms/step - loss: 2.3028 - accuracy: 0.0988 - val_loss: 2.3026 - val_accuracy: 0.1000

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 2.3028 - accuracy: 0.0978 - val_loss: 2.3026 - val_accuracy: 0.1000

Epoch 7/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.3028 - accuracy: 0.0985 - val_loss: 2.3027 - val_accuracy: 0.1000

Epoch 8/10

1563/1563 [==============================] - 7s 4ms/step - loss: 2.3028 - accuracy: 0.0983 - val_loss: 2.3027 - val_accuracy: 0.1000

Epoch 9/10

1563/1563 [==============================] - 7s 5ms/step - loss: 2.3028 - accuracy: 0.0977 - val_loss: 2.3027 - val_accuracy: 0.1000

Epoch 10/10

1563/1563 [==============================] - 6s 4ms/step - loss: 2.3027 - accuracy: 0.1003 - val_loss: 2.3027 - val_accuracy: 0.1000

313/313 - 1s - loss: 2.3027 - accuracy: 0.1000 - 649ms/epoch - 2ms/step

0.10000000149011612

2-conv-32-nodes-1-dense-1679182735

Epoch 1/10

1563/1563 [==============================] - 10s 5ms/step - loss: 2.2186 - accuracy: 0.2418 - val_loss: 1.8019 - val_accuracy: 0.3444

Epoch 2/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.6157 - accuracy: 0.4096 - val_loss: 1.5085 - val_accuracy: 0.4494

Epoch 3/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.3860 - accuracy: 0.5027 - val_loss: 1.4561 - val_accuracy: 0.4900

Epoch 4/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.2114 - accuracy: 0.5698 - val_loss: 1.3658 - val_accuracy: 0.5224

Epoch 5/10

1563/1563 [==============================] - 9s 6ms/step - loss: 1.0755 - accuracy: 0.6179 - val_loss: 1.2934 - val_accuracy: 0.5577

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9622 - accuracy: 0.6597 - val_loss: 1.3202 - val_accuracy: 0.5570

Epoch 7/10

1563/1563 [==============================] - 9s 6ms/step - loss: 0.8571 - accuracy: 0.6961 - val_loss: 1.3947 - val_accuracy: 0.5668

Epoch 8/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7689 - accuracy: 0.7258 - val_loss: 1.4186 - val_accuracy: 0.5740

Epoch 9/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.6918 - accuracy: 0.7531 - val_loss: 1.5087 - val_accuracy: 0.5642

Epoch 10/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.6179 - accuracy: 0.7787 - val_loss: 1.6000 - val_accuracy: 0.5704

Epoch 1/10

1563/1563 [==============================] - 9s 6ms/step - loss: 1.8522 - accuracy: 0.3163 - val_loss: 1.4778 - val_accuracy: 0.4528

Epoch 2/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.3261 - accuracy: 0.5218 - val_loss: 1.2327 - val_accuracy: 0.5600

Epoch 3/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.1124 - accuracy: 0.6057 - val_loss: 1.1058 - val_accuracy: 0.6002

Epoch 4/10

1563/1563 [==============================] - 9s 6ms/step - loss: 0.9971 - accuracy: 0.6508 - val_loss: 1.0136 - val_accuracy: 0.6433

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9062 - accuracy: 0.6821 - val_loss: 1.0097 - val_accuracy: 0.6470

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.8355 - accuracy: 0.7059 - val_loss: 0.9584 - val_accuracy: 0.6679

Epoch 7/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7683 - accuracy: 0.7307 - val_loss: 0.9725 - val_accuracy: 0.6645

Epoch 8/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.7128 - accuracy: 0.7475 - val_loss: 1.0026 - val_accuracy: 0.6620

Epoch 9/10

1563/1563 [==============================] - 9s 6ms/step - loss: 0.6593 - accuracy: 0.7692 - val_loss: 0.9782 - val_accuracy: 0.6731

Epoch 10/10

1563/1563 [==============================] - 9s 6ms/step - loss: 0.6034 - accuracy: 0.7892 - val_loss: 1.0309 - val_accuracy: 0.6674

313/313 - 1s - loss: 1.0309 - accuracy: 0.6674 - 689ms/epoch - 2ms/step

0.6674000024795532

1-conv-64-nodes-1-dense-1679182966

Epoch 1/10

1563/1563 [==============================] - 9s 5ms/step - loss: 3.6369 - accuracy: 0.2343 - val_loss: 1.9486 - val_accuracy: 0.2669

Epoch 2/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.8225 - accuracy: 0.3168 - val_loss: 1.7487 - val_accuracy: 0.3263

Epoch 3/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.6923 - accuracy: 0.3629 - val_loss: 1.7172 - val_accuracy: 0.3758

Epoch 4/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.5610 - accuracy: 0.4259 - val_loss: 1.4899 - val_accuracy: 0.4650

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.3544 - accuracy: 0.5147 - val_loss: 1.3720 - val_accuracy: 0.5054

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.2233 - accuracy: 0.5687 - val_loss: 1.3365 - val_accuracy: 0.5228

Epoch 7/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.1377 - accuracy: 0.6010 - val_loss: 1.3611 - val_accuracy: 0.5380

Epoch 8/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.0731 - accuracy: 0.6233 - val_loss: 1.3338 - val_accuracy: 0.5541

Epoch 9/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.0104 - accuracy: 0.6486 - val_loss: 1.4306 - val_accuracy: 0.5397

Epoch 10/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9628 - accuracy: 0.6654 - val_loss: 1.3287 - val_accuracy: 0.5612

Epoch 1/10

1563/1563 [==============================] - 9s 5ms/step - loss: 1.8550 - accuracy: 0.3195 - val_loss: 1.6253 - val_accuracy: 0.3923

Epoch 2/10

1563/1563 [==============================] - 7s 4ms/step - loss: 1.4790 - accuracy: 0.4576 - val_loss: 1.3466 - val_accuracy: 0.5040

Epoch 3/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.2783 - accuracy: 0.5348 - val_loss: 1.2481 - val_accuracy: 0.5490

Epoch 4/10

1563/1563 [==============================] - 7s 5ms/step - loss: 1.1582 - accuracy: 0.5833 - val_loss: 1.1992 - val_accuracy: 0.5785

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.0697 - accuracy: 0.6186 - val_loss: 1.1021 - val_accuracy: 0.6074

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9996 - accuracy: 0.6464 - val_loss: 1.1091 - val_accuracy: 0.6078

Epoch 7/10

1563/1563 [==============================] - 7s 4ms/step - loss: 0.9417 - accuracy: 0.6690 - val_loss: 1.0812 - val_accuracy: 0.6221

Epoch 8/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.8965 - accuracy: 0.6844 - val_loss: 1.0699 - val_accuracy: 0.6293

Epoch 9/10

1563/1563 [==============================] - 7s 5ms/step - loss: 0.8553 - accuracy: 0.7013 - val_loss: 1.0447 - val_accuracy: 0.6343

Epoch 10/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.8234 - accuracy: 0.7119 - val_loss: 1.0882 - val_accuracy: 0.6267

313/313 - 1s - loss: 1.0882 - accuracy: 0.6267 - 959ms/epoch - 3ms/step

0.6266999840736389

2-conv-64-nodes-1-dense-1679183129

Epoch 1/10

1563/1563 [==============================] - 9s 5ms/step - loss: 2.3403 - accuracy: 0.2334 - val_loss: 1.7725 - val_accuracy: 0.3344

Epoch 2/10

1563/1563 [==============================] - 9s 6ms/step - loss: 1.6551 - accuracy: 0.3889 - val_loss: 1.6976 - val_accuracy: 0.4087

Epoch 3/10

1563/1563 [==============================] - 9s 6ms/step - loss: 1.3768 - accuracy: 0.5077 - val_loss: 1.3147 - val_accuracy: 0.5436

Epoch 4/10

1563/1563 [==============================] - 8s 5ms/step - loss: 1.1631 - accuracy: 0.5908 - val_loss: 1.2500 - val_accuracy: 0.5567

Epoch 5/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.9916 - accuracy: 0.6508 - val_loss: 1.2130 - val_accuracy: 0.5945

Epoch 6/10

1563/1563 [==============================] - 9s 6ms/step - loss: 0.8494 - accuracy: 0.7044 - val_loss: 1.2690 - val_accuracy: 0.5839

Epoch 7/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.7040 - accuracy: 0.7535 - val_loss: 1.2990 - val_accuracy: 0.6086

Epoch 8/10

1563/1563 [==============================] - 9s 5ms/step - loss: 0.5752 - accuracy: 0.7996 - val_loss: 1.4441 - val_accuracy: 0.6013

Epoch 9/10

1563/1563 [==============================] - 9s 6ms/step - loss: 0.4797 - accuracy: 0.8343 - val_loss: 1.5810 - val_accuracy: 0.5893

Epoch 10/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.4028 - accuracy: 0.8621 - val_loss: 1.7814 - val_accuracy: 0.5776

Epoch 1/10

1563/1563 [==============================] - 9s 6ms/step - loss: 1.4460 - accuracy: 0.4770 - val_loss: 1.2692 - val_accuracy: 0.5429

Epoch 2/10

1563/1563 [==============================] - 9s 6ms/step - loss: 1.0334 - accuracy: 0.6333 - val_loss: 0.9871 - val_accuracy: 0.6534

Epoch 3/10

1563/1563 [==============================] - 9s 5ms/step - loss: 0.8014 - accuracy: 0.7206 - val_loss: 0.9429 - val_accuracy: 0.6788

Epoch 4/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.6302 - accuracy: 0.7805 - val_loss: 0.9407 - val_accuracy: 0.6857

Epoch 5/10

1563/1563 [==============================] - 9s 5ms/step - loss: 0.4718 - accuracy: 0.8376 - val_loss: 1.0334 - val_accuracy: 0.6842

Epoch 6/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.3387 - accuracy: 0.8851 - val_loss: 1.1429 - val_accuracy: 0.6751

Epoch 7/10

1563/1563 [==============================] - 9s 6ms/step - loss: 0.2302 - accuracy: 0.9229 - val_loss: 1.3528 - val_accuracy: 0.6607

Epoch 8/10

1563/1563 [==============================] - 9s 6ms/step - loss: 0.1558 - accuracy: 0.9496 - val_loss: 1.6063 - val_accuracy: 0.6679

Epoch 9/10

1563/1563 [==============================] - 8s 5ms/step - loss: 0.1143 - accuracy: 0.9622 - val_loss: 1.8643 - val_accuracy: 0.6531

Epoch 10/10

1563/1563 [==============================] - 9s 6ms/step - loss: 0.0931 - accuracy: 0.9692 - val_loss: 2.0962 - val_accuracy: 0.6462

313/313 - 1s - loss: 2.0962 - accuracy: 0.6462 - 749ms/epoch - 2ms/step

0.6462000012397766

"""

print()

# Ref: tensorflow.org/tutorials/images/cnn && youtube.com/playlist?list=PLQVvvaa0QuDfhTox0AjmQ6tvTgMBZBEXN

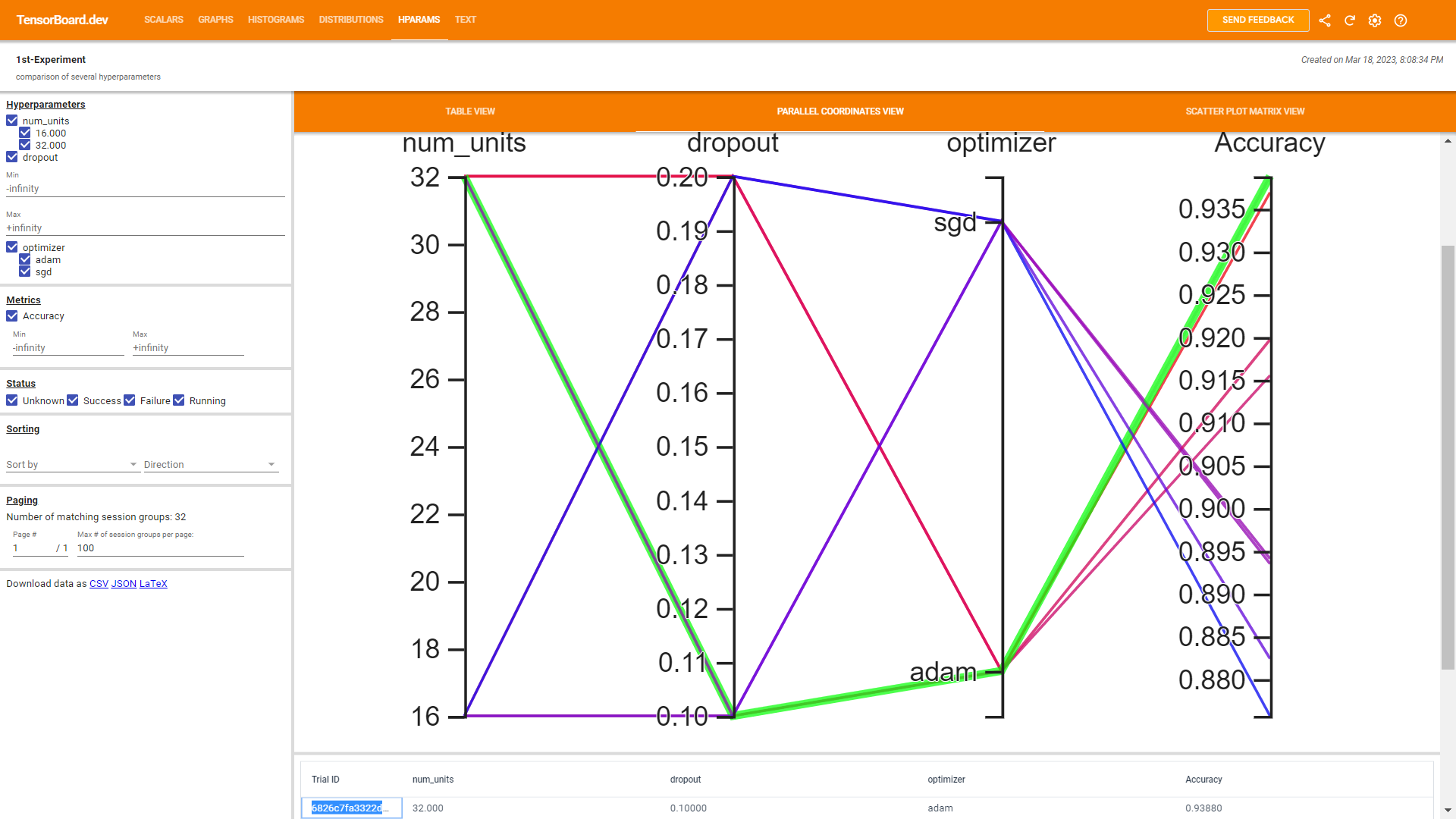

TF2 TB HParams HPO:

Adapting production-ready best practice, and given Pofessor Tim's recent permit to make use of TB (TensorBoard), notwithstanding its HParams dashboard to tune hyperparameters in TensorFlow models. Consideing that when building machine learning models, we need to choose various hyperparameters, such as the dropout rate in a layer or the learning rate. These decisions impact model metrics, such as accuracy. Therefore, an important step in the machine learning workflow is to identify the best hyperparameters for a problem, which often involves experimentation. This process is known as “Hyperparameter Optimization” or “Hyperparameter Tuning”. The HParams dashboard in TensorBoard provides several tools to help with this process of identifying the best experiment or most promising sets of hyperparameters. The below exploration and/or exploitation focuses on the following steps:

- Experiment setup and the HParams experiment summary

- Adapt TensorFlow runs to log hyperparameters and metrics

- Start runs and log them all under one parent directory

- Visualize the results in TensorBoard’s HParams plugin

# Load the TensorBoard notebook extension

%load_ext tensorboard

## Clear any logs from previous runs?

# !rm -rf ./logs/

Import TensorFlow and the TensorBoard HParams plugin:

import tensorflow as tf

from tensorboard.plugins.hparams import api as hp

!pip install -q datasets

# ## c/o: https://github.com/zalandoresearch/fashion-mnist

# # !curl -X GET "https://datasets-server.huggingface.co/first-rows?dataset=fashion_mnist&config=fashion_mnist&split=train"

# # !curl -X GET "https://datasets-server.huggingface.co/first-rows?dataset=fashion_mnist&config=fashion_mnist&split=test"

# # dataset = load_dataset("fashion_mnist")

# # ds = tf.keras.datasets.fashion_mnist

from datasets import load_dataset

# # !curl -X GET "https://datasets-server.huggingface.co/first-rows?dataset=svhn&config=cropped_digits&split=train"

# # !curl -X GET "https://datasets-server.huggingface.co/first-rows?dataset=svhn&config=cropped_digits&split=test"

# dataset = load_dataset("svhn", 'cropped_digits')

ds = tf.keras.datasets.mnist # svhn_cropped

## SVHN Ref: Yuval Netzer, Tao Wang, Adam Coates, Alessandro Bissacco, Bo Wu, Andrew Y. Ng Reading Digits in Natural Images with Unsupervised Feature Learning NIPS Workshop on Deep Learning and Unsupervised Feature Learning 2011. http://ufldl.stanford.edu/housenumbers

# from datasets import load_dataset

# dataset = load_dataset("svhn", 'cropped_digits')

(x_train, y_train),(x_test, y_test) = ds.load_data()

# x_train, y_train, x_test, y_test = dataset.load_data()

# x_train, y_train, x_test, y_test = dataset #.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

11490434/11490434 [==============================] - 0s 0us/step

1. Experiment setup and summary

List the values to try, and log an experiment configuration to TensorBoard; while experimenting with below hyperparameters in the model:

- Number of units in the first dense layer

- Dropout rate in the dropout layer

- Optimizer

HP_NUM_UNITS = hp.HParam('num_units', hp.Discrete([16, 32]))

HP_DROPOUT = hp.HParam('dropout', hp.RealInterval(0.1, 0.2))

HP_OPTIMIZER = hp.HParam('optimizer', hp.Discrete(['adam', 'sgd']))

METRIC_ACCURACY = 'accuracy'

with tf.summary.create_file_writer('logs/hparam_tuning').as_default():

hp.hparams_config(

hparams=[HP_NUM_UNITS, HP_DROPOUT, HP_OPTIMIZER],

metrics=[hp.Metric(METRIC_ACCURACY, display_name='Accuracy')],

)

2. Adapt TensorFlow runs to log hyperparameters and metrics

The model will be quite simple: two dense layers with a dropout layer between them. The training code will look familiar, although the hyperparameters are no longer hardcoded. Instead, the hyperparameters are provided in a dictionary and used throughout the training function:

def train_test_model(hparams):

model = tf.keras.models.Sequential([

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(hparams[HP_NUM_UNITS], activation=tf.nn.relu),

tf.keras.layers.Dropout(hparams[HP_DROPOUT]),

tf.keras.layers.Dense(10, activation=tf.nn.softmax),

])

model.compile(

optimizer=hparams[HP_OPTIMIZER],

loss='sparse_categorical_crossentropy',

metrics=['accuracy'],

)

model.fit(x_train, y_train, epochs=1) # Run with 1 epoch to speed things up for demo purposes

_, accuracy = model.evaluate(x_test, y_test)

return accuracy

For each run, log an hparams summary with the hyperparameters and final accuracy:

def run(run_dir, hparams):

with tf.summary.create_file_writer(run_dir).as_default():

hp.hparams(hparams) # record the values used in this trial

accuracy = train_test_model(hparams)

tf.summary.scalar(METRIC_ACCURACY, accuracy, step=1)

When training Keras models, we can use callbacks instead of writing these directly:

model.fit(

...,

callbacks=[

tf.keras.callbacks.TensorBoard(logdir), # log metrics

hp.KerasCallback(logdir, hparams), # log hparams

],

)

3. Start runs and log them all under one parent directory

Try multiple experiments, training each one with a different set of hyperparameters; use a grid search: try all combinations of the discrete parameters and just the lower and upper bounds of the real-valued parameter, which will take a few minutes:

# session_num = 0

# for num_units in HP_NUM_UNITS.domain.values:

# for dropout_rate in (HP_DROPOUT.domain.min_value, HP_DROPOUT.domain.max_value):

# for optimizer in HP_OPTIMIZER.domain.values:

# hparams = {

# HP_NUM_UNITS: num_units,

# HP_DROPOUT: dropout_rate,

# HP_OPTIMIZER: optimizer,

# }

# run_name = "run-%d" % session_num

# print('--- Starting trial: %s' % run_name)

# print({h.name: hparams[h] for h in hparams})

# run('logs/hparam_tuning/' + run_name, hparams)

# session_num += 1

"""

--- Starting trial: run-0

{'num_units': 16, 'dropout': 0.1, 'optimizer': 'adam'}

1875/1875 [==============================] - 5s 2ms/step - loss: 0.5425 - accuracy: 0.8379

313/313 [==============================] - 1s 2ms/step - loss: 0.2826 - accuracy: 0.9197

--- Starting trial: run-1

{'num_units': 16, 'dropout': 0.1, 'optimizer': 'sgd'}

1875/1875 [==============================] - 5s 2ms/step - loss: 1.0157 - accuracy: 0.6974

313/313 [==============================] - 1s 2ms/step - loss: 0.4662 - accuracy: 0.8824

--- Starting trial: run-2

{'num_units': 16, 'dropout': 0.2, 'optimizer': 'adam'}

1875/1875 [==============================] - 4s 2ms/step - loss: 0.6900 - accuracy: 0.7843

313/313 [==============================] - 1s 2ms/step - loss: 0.2978 - accuracy: 0.9155

--- Starting trial: run-3

{'num_units': 16, 'dropout': 0.2, 'optimizer': 'sgd'}

1875/1875 [==============================] - 5s 2ms/step - loss: 1.1528 - accuracy: 0.6327

313/313 [==============================] - 1s 2ms/step - loss: 0.5148 - accuracy: 0.8757

--- Starting trial: run-4

{'num_units': 32, 'dropout': 0.1, 'optimizer': 'adam'}

1875/1875 [==============================] - 5s 2ms/step - loss: 0.4160 - accuracy: 0.8794

313/313 [==============================] - 1s 2ms/step - loss: 0.2105 - accuracy: 0.9388

--- Starting trial: run-5

{'num_units': 32, 'dropout': 0.1, 'optimizer': 'sgd'}

1875/1875 [==============================] - 6s 3ms/step - loss: 0.8231 - accuracy: 0.7644

313/313 [==============================] - 1s 2ms/step - loss: 0.3887 - accuracy: 0.8935

--- Starting trial: run-6

{'num_units': 32, 'dropout': 0.2, 'optimizer': 'adam'}

1875/1875 [==============================] - 7s 3ms/step - loss: 0.4723 - accuracy: 0.8565

313/313 [==============================] - 1s 2ms/step - loss: 0.2170 - accuracy: 0.9369

--- Starting trial: run-7

{'num_units': 32, 'dropout': 0.2, 'optimizer': 'sgd'}

1875/1875 [==============================] - 4s 2ms/step - loss: 0.9542 - accuracy: 0.7115

313/313 [==============================] - 1s 2ms/step - loss: 0.4270 - accuracy: 0.8941

"""

print()

# ## Plot HParams "Parallel Coordinates View" by calling tensorboard.plugins.hparams.api.plot

# # Import the hparams module from tensorboard.plugins.hparams

# from tensorboard.plugins.hparams import api as hp

# # import tensorboard.plugins.hparams.api_pb2 as hp2

# # # Define some hyperparameters and their values

# HP_NUM_UNITS = hp.HParam('num_units', hp.Discrete([16, 32]))

# HP_DROPOUT = hp.HParam('dropout', hp.RealInterval(0.1, 0.2))

# HP_OPTIMIZER = hp.HParam('optimizer', hp.Discrete(['adam', 'sgd']))

# # # Create a list of hyperparameters

# hparams = [HP_NUM_UNITS, HP_DROPOUT, HP_OPTIMIZER]

# # Create a list of SessionGroup objects

# session_groups = []

# # session_num = 0

# for num_units in HP_NUM_UNITS.domain.values: